ROJUNACO – robotics and augmented digital twin for construction

- Project leader: CESI LINEACT

- Call for projects: Métropole Rouen Normandie – Research Program

- Total project budget: €77k

- CESI budget for the project: €77k

- Project launch date: October 1, 2023

- Project duration: 24 months

The ROJUNACO project is part of Construction 4.0 and focuses on research into data recalibration, semantic information retrieval, and the implementation of a proof of concept for automated inspection missions based on digital twins, BIM, robotics, and augmented reality.

Achievements as of March 31, 2025:

During this period, we continued our work on creating synthetic datasets from digital twins and evaluating them with the YOLOv5-6D approach for object detection and 6D pose estimation. Several building elements were targeted: doors, windows, and fire extinguishers.

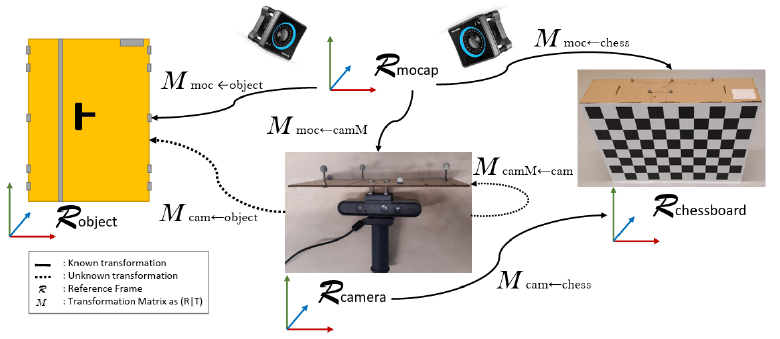

We then turned our attention to creating real self-labeled datasets in the flexible production workshop of the Industry of the Future platform at the CESI campus in Rouen. We focused on one object in particular: the laboratory doors. To build this dataset, we attached MOCAP (motion capture) markers to a camera so that its position could be tracked in the laboratory's global reference frame. The positions of the doors were also recorded in this same reference frame. Because the doors are located behind the MOCAP cameras, we built a 2.5-meter aluminum pole equipped with markers to report the position of a few characteristic points of each door in the MOCAP reference frame. Finally, a calibration phase using a checkerboard was necessary to determine the position of the camera's optical center in the MOCAP reference frame. The entire experimental setup is shown in Figure 1.

Figure 1: Experimental setup for creating real self-labeled datasets
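The self-labeling principle described above amounts to chaining rigid transforms: door points measured in the MOCAP frame are moved into the camera frame and projected into the image. The following is a minimal sketch of that chain, not the project's actual code. The function names, the frame names (`T_mocap_rig`, `T_rig_cam`), and the pinhole intrinsics `K` are assumptions introduced for illustration.

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_transform(T):
    """Invert a rigid transform using the rotation transpose."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def project_points(points_mocap, T_mocap_rig, T_rig_cam, K):
    """Project 3D points expressed in the MOCAP frame into pixel coordinates.

    points_mocap: (N, 3) door points measured in the MOCAP frame (hypothetical input).
    T_mocap_rig:  pose of the camera's marker rig in the MOCAP frame (per image).
    T_rig_cam:    pose of the optical center in the rig frame, assumed to come
                  from the checkerboard calibration.
    K:            3x3 pinhole intrinsic matrix (assumed, no lens distortion).
    """
    # Pose of the MOCAP frame seen from the camera optical frame.
    T_cam_mocap = invert_transform(T_mocap_rig @ T_rig_cam)
    pts_h = np.hstack([points_mocap, np.ones((len(points_mocap), 1))])
    pts_cam = (T_cam_mocap @ pts_h.T).T[:, :3]
    uv = (K @ pts_cam.T).T
    # Perspective division gives pixel coordinates.
    return uv[:, :2] / uv[:, 2:3], pts_cam
```

With this chain, every image can be labeled automatically once the rig pose is read from the MOCAP system, since the door points and the calibration stay fixed.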

We also examined how much the synthetic data in the training set contributes to model performance. To do this, we plan to gradually inject real data into the dataset and evaluate the model's performance using three metrics:

- Accuracy of the distance between 3D center points: Percentage of translation errors, measured relative to the centroid of the ground-truth 3D bounding boxes, that are less than 10% of the object's diameter.

- Accuracy of the 2D projection at 5 pixels: Percentage of pixel errors less than 5 pixels relative to the projected eight corners of the ground-truth 3D bounding boxes.

- Accuracy at 25 centimeters/25 degrees: Percentage of translation errors less than 25 cm and rotation errors less than 25 degrees relative to the centroid of the 3D bounding boxes.
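The three metrics above can be sketched as follows. This is an illustrative implementation under stated assumptions, not the evaluation code used in the project: translations are assumed to be in meters and to locate the bounding-box centroid in the camera frame, the 2D error is assumed to be the mean over the eight projected corners, and all function names and the intrinsic matrix `K` are hypothetical.

```python
import itertools

import numpy as np


def box_corners(size):
    """Eight corners of a box of the given (x, y, z) size, centered on its centroid."""
    half = np.asarray(size, dtype=float) / 2.0
    return np.array([half * s for s in itertools.product((-1, 1), repeat=3)])


def rotation_error_deg(R_pred, R_gt):
    """Geodesic angle in degrees between two rotation matrices."""
    cos_angle = (np.trace(R_pred.T @ R_gt) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def project(points, R, t, K):
    """Pinhole projection of object-frame points under pose (R, t)."""
    cam = points @ R.T + t
    uv = cam @ K.T
    return uv[:, :2] / uv[:, 2:3]


def evaluate_pose(R_pred, t_pred, R_gt, t_gt, corners, K, diameter):
    """Return pass/fail flags for one prediction under the three criteria."""
    trans_err = float(np.linalg.norm(t_pred - t_gt))
    rot_err = rotation_error_deg(R_pred, R_gt)
    # Assumption: mean pixel distance over the eight projected corners.
    pix_err = float(np.linalg.norm(
        project(corners, R_pred, t_pred, K) - project(corners, R_gt, t_gt, K),
        axis=1).mean())
    return {
        "center_10pct": trans_err < 0.10 * diameter,
        "proj_5px": pix_err < 5.0,
        "25cm_25deg": trans_err < 0.25 and rot_err < 25.0,
    }


def accuracy(results):
    """Percentage of predictions passing each criterion."""
    return {key: 100.0 * float(np.mean([r[key] for r in results]))
            for key in results[0]}
```

Calling `evaluate_pose` once per test image and passing the resulting list to `accuracy` gives one percentage per metric, which can then be tracked as the share of real data in the training set grows.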

This work is ongoing. We plan to showcase it in a scientific paper to be submitted to the IEEE IECON 2025 conference (submission deadline April 30). Acceptance notifications will be sent out on June 30. The conference will take place in Madrid in October.