INHARD : INdustrial Human Action Recognition Dataset


We introduce an RGB+S dataset named “Industrial Human Action Recognition Dataset” (InHARD), collected in a real-world setting for industrial human action recognition. It comprises over 2 million frames from 16 distinct subjects, and contains 13 different industrial action classes and over 4800 action samples.

This dataset is intended to support the study and development of learning techniques for human action analysis in industrial environments involving human-robot collaboration.

The dataset is available on GitHub.

Modalities

Skeleton modality

We used a “Combination Perception Neuron 32 Edition v2” motion sensor to capture skeletal data at a frequency of 120 Hz.

Skeleton data comprises:

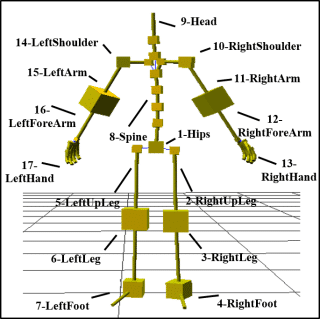

- The 3D locations (Tx, Ty and Tz) of 17 major body joints

- The 3 rotations around each axis (Rx, Ry and Rz)

Skeleton data are saved into BVH format files and are stored in the Skeleton/ folder of the InHARD dataset.
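As a minimal sketch of how the skeleton files could be read, the following parses the MOTION section of a BVH file using only the standard library. It relies on the general BVH layout (a `MOTION` line followed by `Frames:` and `Frame Time:` lines, then one line of channel values per frame). The function name `read_bvh_motion` and the tiny sample file are hypothetical and are not taken from the InHARD files, whose joint hierarchy and channel order should be checked directly.

```python
from typing import List, Tuple


def read_bvh_motion(text: str) -> Tuple[float, List[List[float]]]:
    """Return (frame_time_seconds, frames) from the MOTION section of BVH text."""
    lines = [line.strip() for line in text.splitlines()]
    start = lines.index("MOTION")  # the MOTION keyword sits on its own line
    n_frames = int(lines[start + 1].split(":")[1])      # "Frames: N"
    frame_time = float(lines[start + 2].split(":")[1])  # "Frame Time: t"
    # Each remaining non-empty line holds the channel values of one frame.
    frames = [
        [float(value) for value in line.split()]
        for line in lines[start + 3:]
        if line
    ]
    if len(frames) != n_frames:
        raise ValueError(f"expected {n_frames} frames, found {len(frames)}")
    return frame_time, frames


# Synthetic single-joint example (NOT an InHARD file), sampled at 120 Hz.
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Yrotation Xrotation Zrotation
  End Site
  {
    OFFSET 0.0 10.0 0.0
  }
}
MOTION
Frames: 2
Frame Time: 0.008333
0.0 90.0 0.0 0.0 0.0 0.0
1.0 90.5 0.0 5.0 0.0 0.0
"""

frame_time, frames = read_bvh_motion(SAMPLE_BVH)
print(round(1.0 / frame_time), len(frames))
```

For a real recording, the text would come from reading a file in the Skeleton/ folder, and the per-frame values would have to be mapped to joints using the HIERARCHY section, which this sketch does not interpret.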

Video modality

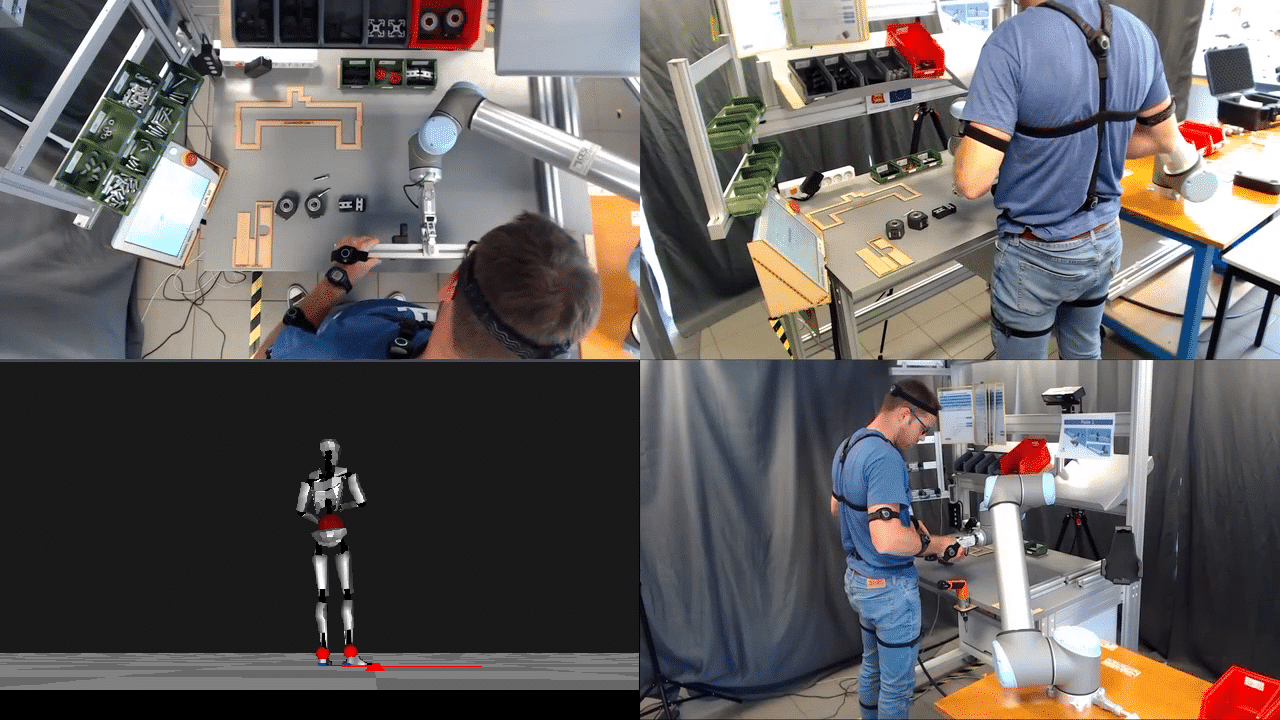

We used 3 C920 cameras to capture RGB data. Together, the cameras capture three different views of the same action. For each setup, two cameras were placed at the same height but at two different horizontal angles, -45° and +45°, to capture both the left and right sides. The third camera was placed above the subjects to capture the top view.

- Camera 1 always observes top views and is displayed on the top left quarter of the RGB video.

- Camera 2 observes left side views and is shown on the top right quarter of the RGB video.

- Camera 3 observes right side views and is displayed on the bottom right quarter of the RGB video, as shown in the figure above.

RGB files are stored in the RGB/ folder of the InHARD dataset.
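The quarter layout described above can be sketched as pixel crop boxes. This is an illustration only: it assumes the three views occupy exactly equal quarters of the frame, and the function name `camera_boxes` and the dictionary keys are hypothetical.

```python
from typing import Dict, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive pixel bounds


def camera_boxes(width: int, height: int) -> Dict[str, Box]:
    """Crop boxes for the three camera views, assuming equal frame quarters."""
    half_w, half_h = width // 2, height // 2
    return {
        "camera_1_top": (0, 0, half_w, half_h),             # top left quarter
        "camera_2_left": (half_w, 0, width, half_h),        # top right quarter
        "camera_3_right": (half_w, half_h, width, height),  # bottom right quarter
    }


boxes = camera_boxes(1920, 1080)
print(boxes["camera_3_right"])
```

With a decoded frame stored as an array of shape (height, width, 3), a view could then be cropped as `frame[y0:y1, x0:x1]`. The 1920x1080 resolution in the example is an arbitrary choice, not a property stated for the dataset.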

Action classes

The list of 13 meta-actions and 74 action classes is available in the Action-Meta-action-list.xlsx file.

Inside the InHARD.zip dataset, you will find the InHARD.csv file, which provides a dataframe with all dataset information, including Filename, Subject, Operation, Action low/high level label, Action start/end, Duration, etc., to facilitate handling and use of the dataset.

We used a software tool called ANVIL to label our data. You can install it if you want to edit, add or remove actions in the action label files (.anvil) located in the /Labels/ folder.
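As a rough sketch of how such a table might be used, the following sums action durations per recording with the standard `csv` module. The column names `Filename` and `Duration` are assumptions based on the field list above, and the exact header spelling and the unit of the duration values should be checked against the real InHARD.csv. The sample rows are made up for illustration.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, TextIO


def total_duration_per_file(
    handle: TextIO,
    file_col: str = "Filename",      # assumed column name
    duration_col: str = "Duration",  # assumed column name
) -> Dict[str, float]:
    """Sum the duration of all action rows belonging to each recording."""
    totals: Dict[str, float] = defaultdict(float)
    for row in csv.DictReader(handle):
        totals[row[file_col]] += float(row[duration_col])
    return dict(totals)


# Made-up rows, not taken from the dataset.
SAMPLE_CSV = (
    "Filename,Subject,Duration\n"
    "P01_R01,P01,2.5\n"
    "P01_R01,P01,1.5\n"
    "P02_R01,P02,4.0\n"
)

print(total_duration_per_file(io.StringIO(SAMPLE_CSV)))
```

For the real file, the handle would come from `open("InHARD.csv", newline="")` after extracting the archive.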

Experiments and performance metrics

We propose a set of usage guidelines for the InHARD dataset to support future work. First, we suggest dividing the data into two levels, experts and beginners, according to each subject’s expertise with the manipulation. Subjects who perform the whole manipulation in less than 6 minutes, measured as the average total duration of their actions, are selected as experts. The remaining subjects are categorized as beginners. We define the training and validation sets as follows:

- S_train={P01_R01, P01_R03, P03_R01, P03_R03, P03_R04, P04_R02, P05_R03, P05_R04, P06_R01, P07_R01, P07_R02, P08_R02, P08_R04, P09_R01, P09_R03, P10_R01, P10_R02, P10_R03, P11_R02, P12_R01, P12_R02, P13_R02, P14_R01, P15_R01, P15_R02, P16_R02}

- S_val={P01_R02, P02_R01, P02_R02, P04_R01, P05_R01, P05_R02, P08_R01, P08_R03, P09_R02, P11_R01, P14_R02, P16_R01}

Note: Samples in bold are selected as experts. The remaining samples are selected as beginners.
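The split and the expert criterion above can be sketched in a few lines. The two sets are copied from the lists above. The helper names `split_of` and `is_expert` are hypothetical, and the sketch assumes that each recording is identified by a string such as `P01_R01` and that durations are expressed in seconds.

```python
from typing import Sequence

S_TRAIN = frozenset({
    "P01_R01", "P01_R03", "P03_R01", "P03_R03", "P03_R04", "P04_R02",
    "P05_R03", "P05_R04", "P06_R01", "P07_R01", "P07_R02", "P08_R02",
    "P08_R04", "P09_R01", "P09_R03", "P10_R01", "P10_R02", "P10_R03",
    "P11_R02", "P12_R01", "P12_R02", "P13_R02", "P14_R01", "P15_R01",
    "P15_R02", "P16_R02",
})

S_VAL = frozenset({
    "P01_R02", "P02_R01", "P02_R02", "P04_R01", "P05_R01", "P05_R02",
    "P08_R01", "P08_R03", "P09_R02", "P11_R01", "P14_R02", "P16_R01",
})


def split_of(recording_id: str) -> str:
    """Return "train" or "val" for a recording identifier such as "P01_R01"."""
    if recording_id in S_TRAIN:
        return "train"
    if recording_id in S_VAL:
        return "val"
    raise KeyError(f"{recording_id} is in neither S_train nor S_val")


def is_expert(total_durations_s: Sequence[float], limit_s: float = 6 * 60) -> bool:
    """Apply the 6-minute rule to one subject's per-recording total durations."""
    if not total_durations_s:
        raise ValueError("at least one recording is required")
    return sum(total_durations_s) / len(total_durations_s) < limit_s


print(len(S_TRAIN), len(S_VAL), split_of("P02_R01"))
```

The two sets are disjoint, so every listed recording maps to exactly one split. `is_expert` is only one possible reading of the stated criterion and does not reproduce the expert labels marked in the lists.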

Citations

To cite this work, please use:

@InProceedings{inhard2020ichms,
  author = {Mejdi DALLEL and Vincent HAVARD and David BAUDRY and Xavier SAVATIER},
  title = {An Industrial Human Action Recognition Dataset in the Context of Industrial Collaborative Robotics},
  booktitle = {IEEE International Conference on Human-Machine Systems ICHMS},
  month = {Postponed due to COVID-19},
  year = {2020},
  url = {https://github.com/vhavard/InHARD}
}