Oasis – Robotics & artificial intelligence for industrial risk management in hostile environments

- Project leader: CESI LINEACT

- Call for projects: Métropole Rouen Normandie – Platform Facility

- Total project budget: €334,240

- CESI project budget: €334,240

- Project launch: October 1, 2022

- Project duration: 24 months

The OASIS project, “robOtics & Artificial intelligence for induStrial rIsk management in hoStile environments,” is entering its operational phase with the arrival of the SPOT robot at the CESI Campus in Rouen!

OASIS is the winner of the 2022 “Platform” call for projects launched by Métropole Rouen Normandie and is supported by the Normandy Energies sector. It aims to extend the Future Factory demonstrator at the CESI campus in Rouen to industrial risk management, and to broaden its scope to the energy industry.

To this end, in February 2023, the CESI Campus in Rouen acquired the SPOT quadrupedal robot from Boston Dynamics. Its dog-like morphology makes it particularly agile in areas that are risky, hostile, or difficult for humans to access, for missions involving the inspection, collection, processing, and analysis of information. Its articulated arm allows it to perform actions and interact with its environment (e.g., opening or closing a valve, or moving an object).

Several actions are planned as part of OASIS:

- Supporting the digital transformation of companies in the energy industry to meet industrial risk management challenges. This will be achieved through the establishment of a Technology and Applications Advisory Committee made up of stakeholders in these sectors, awareness-raising workshops, training on the SPOT robot, and the implementation of a proof of concept evaluated in real-life situations.

- Extending CESI LINEACT’s current research activity, which combines virtual models and real environments for manufacturing workshops, to industrial risk management in harsh environments.

Achievements as of December 31, 2024 (end of the project):

- We continued our work on integrating AI building blocks for object recognition and 6D pose estimation on the SPOT robot (an extension of the COLIBRY project). As part of this work, we conducted a new review of recent approaches in the scientific literature and identified YOLOV5-6D.

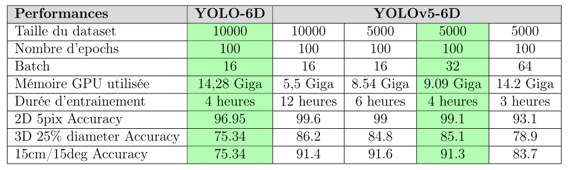

- We migrated our existing work to this new approach and compared the performance of YOLO-6D and YOLOV5-6D on our industrial object datasets (see Table 1).

- We developed a simulator for SPOT’s robotic arm. The arm does not appear in the ROS software we use to program robotic tasks, and in particular not in the RViz visualization interface. This is a major obstacle: only part of the robot’s behavior, namely its movement through an environment, can be simulated, while actions involving its arm cannot. To overcome this problem, we modeled SPOT’s articulated arm as a URDF (Unified Robot Description Format) model by defining:

  - Each part of the arm as a link, with specific dimensions, materials, and visual representations that reproduce the actual geometry of the arm. The joints between links are also defined, each with its own limits, so that the virtual articulated arm correctly reproduces SPOT’s physical arm.

  - The inertial and physical properties of the links, such as mass and center of gravity, which increase the accuracy of physical simulations.
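As an illustration of the elements listed above, the following Python sketch embeds a minimal URDF fragment and reads back the joint limits and link masses using only the standard library. The link and joint names, dimensions, masses, and limits are hypothetical placeholders, not values from the actual SPOT arm model.

```python
import xml.etree.ElementTree as ET

# Hypothetical two-link fragment: all names and numbers are illustrative
# placeholders, not SPOT's real geometry, inertia or joint limits.
URDF = """<robot name="arm_sketch">
  <link name="base_link"/>
  <link name="upper_arm">
    <visual>
      <geometry><cylinder radius="0.04" length="0.30"/></geometry>
      <material name="grey"><color rgba="0.5 0.5 0.5 1.0"/></material>
    </visual>
    <inertial>
      <origin xyz="0 0 0.15" rpy="0 0 0"/>
      <mass value="1.2"/>
      <inertia ixx="0.01" ixy="0" ixz="0" iyy="0.01" iyz="0" izz="0.001"/>
    </inertial>
  </link>
  <joint name="shoulder_pitch" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <origin xyz="0 0 0.1" rpy="0 0 0"/>
    <axis xyz="0 1 0"/>
    <limit lower="-1.57" upper="1.57" effort="30.0" velocity="2.0"/>
  </joint>
</robot>
"""


def joint_limits(urdf_text):
    """Return {joint_name: (lower, upper)} for every joint with a <limit>."""
    root = ET.fromstring(urdf_text)
    limits = {}
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        if limit is not None:
            limits[joint.get("name")] = (
                float(limit.get("lower")),
                float(limit.get("upper")),
            )
    return limits


def link_masses(urdf_text):
    """Return {link_name: mass_in_kg} for every link with inertial data."""
    root = ET.fromstring(urdf_text)
    masses = {}
    for link in root.findall("link"):
        mass = link.find("inertial/mass")
        if mass is not None:
            masses[link.get("name")] = float(mass.get("value"))
    return masses
```

A quick check of this kind can catch a joint left without limits or a link left without inertial data before the model is loaded into ROS.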

- Once defined, the URDF model is integrated into ROS as a package, which has several advantages:

  - Real-time joint control: Using the ros_control and joint_state_publisher packages, the arm’s joints can be manipulated in real time. Commands sent to SPOT are translated into joint movements that are reflected both in the URDF model and on the physical robot.

  - State publishing: The joint_state_publisher node publishes the state of each joint in real time. This is essential for accurate visualization in RViz, where joint movements must correspond to the physical movements of SPOT’s arm.
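To make the state-publishing step more concrete, here is a minimal sketch of a hand-written publisher, assuming a ROS 1 installation with `rospy` and `sensor_msgs`. It stands in for the joint_state_publisher node purely for illustration: the joint names, node name, and sinusoidal motion are hypothetical, and the real names would come from the arm’s URDF.

```python
import math

# Hypothetical joint names; the real ones are defined in the arm's URDF.
ARM_JOINTS = ["shoulder_yaw", "shoulder_pitch", "elbow_pitch"]


def arm_positions(t):
    """Illustrative joint angles (rad) at time t: a slow sinusoidal sweep."""
    return [0.5 * math.sin(0.2 * t + i) for i in range(len(ARM_JOINTS))]


def publish_arm_state(rate_hz=10.0):
    """Publish arm joint states on the joint_states topic.

    Assumes ROS 1; rospy is imported here so that the rest of the module
    can be used on a machine without ROS installed.
    """
    import rospy
    from sensor_msgs.msg import JointState

    rospy.init_node("arm_state_sketch")  # hypothetical node name
    pub = rospy.Publisher("joint_states", JointState, queue_size=10)
    rate = rospy.Rate(rate_hz)
    while not rospy.is_shutdown():
        msg = JointState()
        msg.header.stamp = rospy.Time.now()
        msg.name = list(ARM_JOINTS)
        msg.position = arm_positions(rospy.get_time())
        pub.publish(msg)
        rate.sleep()
```

With a robot_state_publisher node running alongside, messages on this topic are what would let RViz update the pose of each link of the URDF model.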

SPOT and its robotic arm are visualized in RViz (see Figure 1). This allows users to see a virtual representation of the arm and robot in their workspace, with each link and joint moving according to real-time commands. Using the ROS MoveIt and rviz_motion_planning plugins, users can plan and execute trajectories and visualize arm movements in RViz. Visual feedback in RViz makes it easy to detect discrepancies between planned and actual movement, allowing for precise adjustments.

Several tests were carried out by moving the SPOT robot arm according to different patterns. Real-time movement validation was achieved by comparing the physical movements of the arm with the RViz visualization.
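The comparison described above could also be quantified. The sketch below assumes, hypothetically, that planned and measured joint positions have been logged as lists of samples (one list of joint angles in radians per time step). The tolerance value is an arbitrary placeholder, not a figure from the project.

```python
def max_joint_deviation(planned, measured):
    """Largest absolute per-joint difference (rad) over paired samples."""
    if len(planned) != len(measured):
        raise ValueError("trajectories must have the same number of samples")
    worst = 0.0
    for p_sample, m_sample in zip(planned, measured):
        if len(p_sample) != len(m_sample):
            raise ValueError("samples must have the same number of joints")
        for p, m in zip(p_sample, m_sample):
            worst = max(worst, abs(p - m))
    return worst


def movement_matches(planned, measured, tolerance=0.05):
    """True if the measured motion stays within `tolerance` rad of the plan."""
    return max_joint_deviation(planned, measured) <= tolerance


# Example with two joints and two time steps (made-up values).
planned = [[0.0, 0.10], [0.20, 0.30]]
measured = [[0.0, 0.12], [0.21, 0.30]]
deviation = max_joint_deviation(planned, measured)
```

A single worst-case figure like this complements the visual check in RViz when the same movement pattern is replayed several times.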

- Finally, we produced technical documentation of the developments in order to capitalize on the work carried out.

Recruitment during this period:

- Tanny DAMET, Master’s 2 intern (6 months), to support the work carried out by research engineer Ilamparidi SANKAR, particularly on the integration of AI for the SPOT robot for reconnaissance and 6D object detection missions.

- Alexandre DELATOUR, CESI intern (5 weeks), as part of the introductory research internship offered to students in the Grande Ecole Program. This internship focused on producing technical documentation, in the form of a “code review,” of the software components developed by Ilamparidi SANKAR and Tanny DAMET, with the aim of capitalizing on the work carried out.