SCOPES: collaborative semantics for evidential perception of situations

- Partners: CESI LINEACT, LITIS, IRSEEM

- Call for projects: ANR ASTRID Robotics 2021

- CESI project budget (funding): €210k (€105k)

- Overall budget (funding): €547k (€300k)

- Project launch: January 1, 2022

- Project duration: 39 months

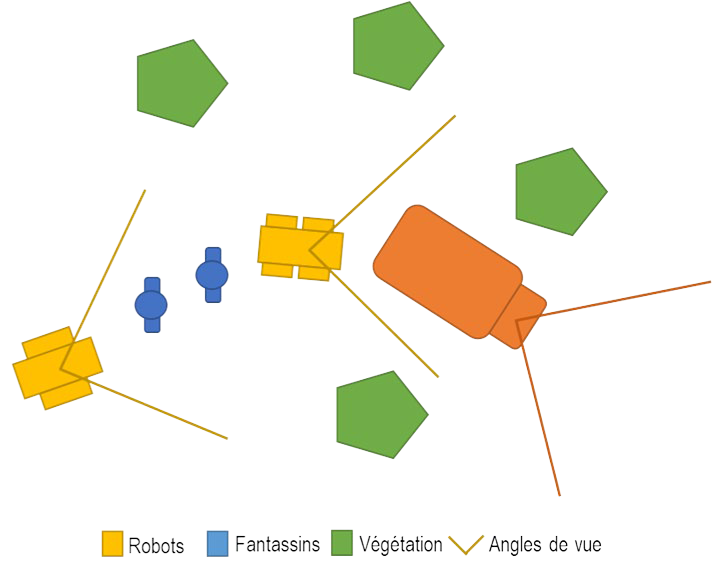

The fields of Industry 5.0 and defense increasingly rely on systems of systems in which robotic agents must adapt to the humans with whom they interact. Heterogeneous fleets of agents equipped with perception devices offer an opportunity: once their individual information is merged, they make it possible to address problems such as optimizing fleet operation, securing convoys, improving safety and security for human operators, and increasing flexibility when situations or the environment are reconfigured.

Pooling information makes it possible to produce a global view of the situation from the individual perceptions of each agent, robotic or not. Each individual perception module produces an interpretation of the scene that is inherently subject to uncertainty. The consequences of deploying the fleet in complex or hostile environments must also be considered. The communication link required for information exchange is constrained by bandwidth, which can be very limited, or unavailable altogether when the link is broken, even temporarily. The positions of the viewpoints used to build the situational view also depend on the quality of the localization sources, when these are available.
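To illustrate how uncertain individual perceptions might be merged, here is a minimal sketch based on Dempster's rule of combination, a standard operator in evidence theory. It is an assumption chosen for illustration: this summary does not state which combination rule the project uses. The class labels, mass values, and the function name `combine_dempster` are all hypothetical.

```python
from itertools import product


def combine_dempster(m1, m2):
    """Fuse two mass functions with Dempster's rule of combination.

    Each mass function maps a frozenset of class labels to a belief mass,
    and the masses of one function sum to 1. Returns the fused mass
    function and the conflict mass that was normalized away.
    """
    combined = {}
    conflict = 0.0
    for (set_a, mass_a), (set_b, mass_b) in product(m1.items(), m2.items()):
        intersection = set_a & set_b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # The two sources support incompatible hypotheses.
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("Sources are in total conflict; the rule is undefined.")
    normalizer = 1.0 - conflict
    fused = {labels: mass / normalizer for labels, mass in combined.items()}
    return fused, conflict


# Hypothetical frame of discernment for a single grid cell.
ROAD = frozenset({"road"})
PEDESTRIAN = frozenset({"pedestrian"})
UNKNOWN = ROAD | PEDESTRIAN  # ignorance: mass not committed to either class

# Hypothetical evidence reported by two agents observing the same cell.
agent_a = {PEDESTRIAN: 0.6, UNKNOWN: 0.4}
agent_b = {PEDESTRIAN: 0.5, ROAD: 0.2, UNKNOWN: 0.3}

fused, conflict = combine_dempster(agent_a, agent_b)
for labels, mass in fused.items():
    print(sorted(labels), round(mass, 3))
print("conflict:", round(conflict, 3))
```

Keeping an explicit mass on the "unknown" set is what separates this representation from a plain probability: an agent with a poor view of a cell can report ignorance instead of being forced to split its belief between classes.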

The SCOPES project proposes to develop a solution for producing a situational view augmented with uncertainty, as a source of information for decision-making. The project’s contributions will be:

- A formal representation of the situational view, integrating the various sources of uncertainty, allowing for human interpretation.

- A robust localization method based on the graph paradigm and semantic information provided by each agent.

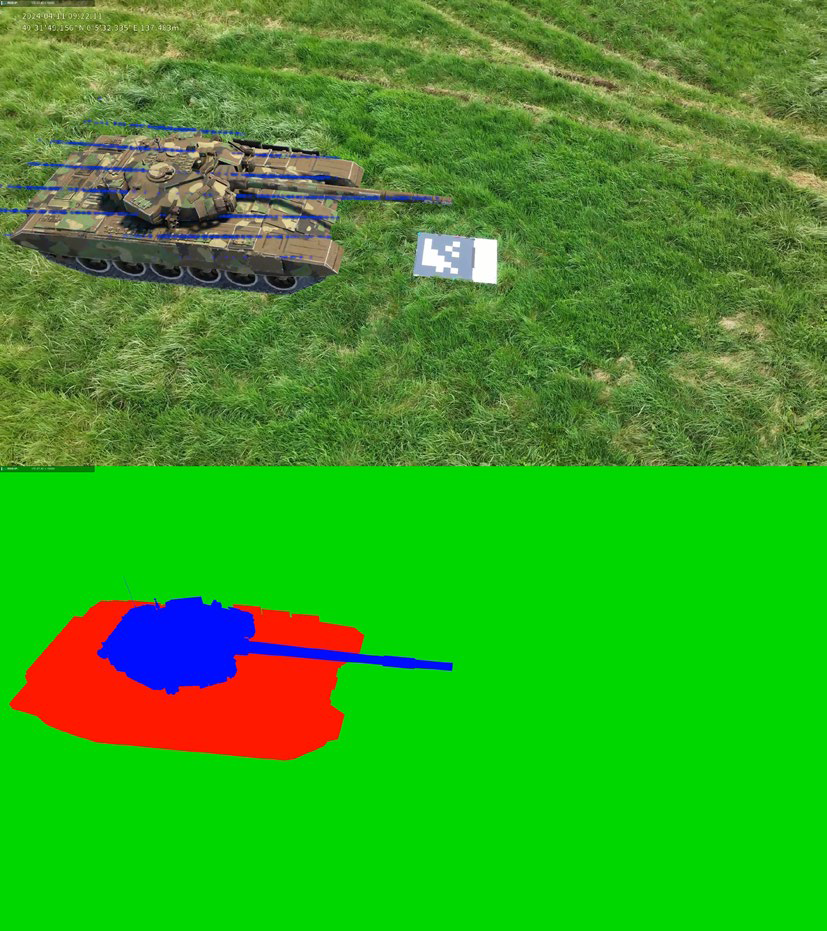

- A functional specification and associated datasets for objective and quantitative evaluation of collaborative perception situations through the use of the project partners’ remarkable technological platforms.

The SCOPES project will deliver outputs at TRL 4. The project’s value to economic stakeholders has been recognized through its certification by NAE (Normandie AeroEspace).

Achievements as of December 31, 2024:

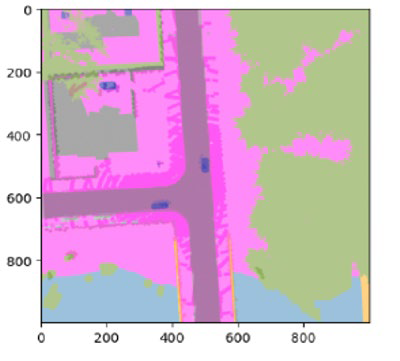

- Formalization and representation of an evidential semantic grid.

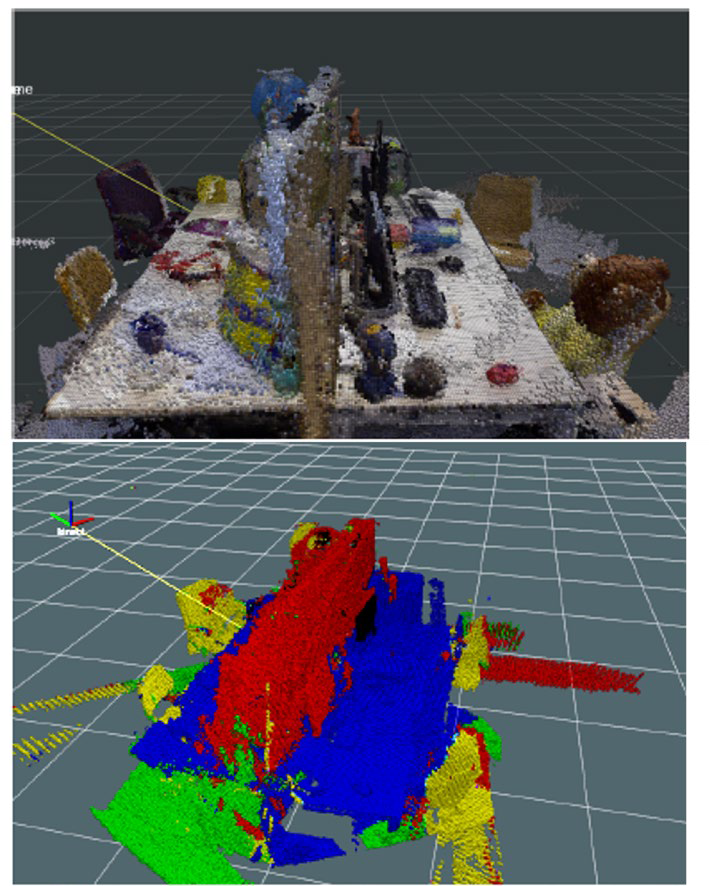

- Robust and lightweight localization and mapping using graphs in complex environments through spatio-temporal consistency.

- Methodology for data production using extended reality.

- Situation overview taking into account sources of uncertainty (HMI).

- Representation, restitution, and visualization of uncertainty in a map for human decision-making.

- Software production:

  - Simulated data generation code (CARLA/OpenScenario),

  - Code for estimating evidential semantic occupancy grids,

  - Code for the RGB-D SLAM approach with spatial and temporal consistency, available on GitHub,

  - Software for multimodal augmentation of RGB-D data,

  - Software for visualizing evidential semantic occupancy grids in a digital twin.

The project’s closing meeting took place on March 13 at the CESI campus in Rouen, attended by partners and funders, and the project officially ended on March 31, 2025.